Google has launched its seventh-generation AI processor, Ironwood, designed to speed up AI applications, particularly “inference” computing: the rapid calculations that produce chatbot answers and other AI outputs.

According to Google Vice President Amin Vahdat, Ironwood combines functions from earlier designs while increasing memory capacity. Like Google’s previous Tensor Processing Units (TPUs), it is built for fast AI calculations. The chips can operate in groups of up to 9,216, and Google says Ironwood delivers twice the performance per watt of the previous generation, Trillium. A full pod of 9,216 chips reaches 42.5 exaflops of computing power, which Google compares with the 1.7 exaflops of El Capitan, currently the world’s largest supercomputer.
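The pod figures above imply a per-chip number and a headline ratio that are easy to check. The short Python sketch below works only from the values quoted in this article; the variable and function names are illustrative, and the article does not state the numeric precision behind either exaflops figure, so the ratio should be read as nominal.

```python
# Figures as quoted in the article (vendor-reported, not independently verified).
POD_CHIPS = 9_216            # maximum chips per Ironwood pod
POD_EXAFLOPS = 42.5          # quoted compute of a full pod
EL_CAPITAN_EXAFLOPS = 1.7    # quoted compute of El Capitan


def per_chip_petaflops(pod_exaflops: float, chips: int) -> float:
    """Average compute per chip in petaflops (1 exaflop = 1,000 petaflops)."""
    return pod_exaflops * 1_000 / chips


chip_pf = per_chip_petaflops(POD_EXAFLOPS, POD_CHIPS)
ratio = POD_EXAFLOPS / EL_CAPITAN_EXAFLOPS

print(f"Per-chip compute: {chip_pf:.2f} petaflops")
print(f"Pod vs. El Capitan: about {ratio:.0f}x")
```

On these numbers each chip contributes roughly 4.6 petaflops, and a full pod comes out at about 25 times the El Capitan figure.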

Several figures stand out. Ironwood offers twice the performance per watt of Trillium, Google’s sixth-generation TPU announced last year. Each chip carries 192 GB of memory, six times Trillium’s capacity. High-Bandwidth Memory (HBM) bandwidth reaches 7.2 TB/s per chip, 4.5 times that of Trillium, and Inter-Chip Interconnect (ICI) bandwidth rises to 1.2 TB/s bidirectional, 1.5 times Trillium’s.
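Because each Ironwood figure is given as a multiple of Trillium’s, the Trillium baselines can be recovered by division. The sketch below does that and also totals the memory in a full 9,216-chip pod. It uses only numbers quoted in this article; the dictionary keys are illustrative names, and the bandwidth values are kept in whatever unit the article quotes.

```python
# Ironwood per-chip figures as quoted in the article.
IRONWOOD = {
    "memory_gb": 192.0,      # memory per chip, in GB
    "hbm_bandwidth": 7.2,    # HBM bandwidth per chip, in the article's unit
    "ici_bandwidth": 1.2,    # bidirectional ICI bandwidth, in the article's unit
}

# Quoted multiples of Ironwood over Trillium for the same metrics.
MULTIPLE_OVER_TRILLIUM = {
    "memory_gb": 6.0,
    "hbm_bandwidth": 4.5,
    "ici_bandwidth": 1.5,
}

POD_CHIPS = 9_216  # maximum chips per pod, as quoted in the article

# Implied Trillium baseline = Ironwood value / quoted multiple.
trillium = {key: IRONWOOD[key] / MULTIPLE_OVER_TRILLIUM[key] for key in IRONWOOD}

# Total memory across a full pod, converted from GB to PB (decimal units).
pod_memory_pb = POD_CHIPS * IRONWOOD["memory_gb"] / 1_000_000

for key, value in trillium.items():
    print(f"Implied Trillium {key}: {value:g}")
print(f"Full-pod memory: {pod_memory_pb:.2f} PB")
```

The implied Trillium values are about 32 GB of memory, 1.6 for HBM bandwidth, and 0.8 for ICI bandwidth, and a full pod holds roughly 1.77 PB of memory in total.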

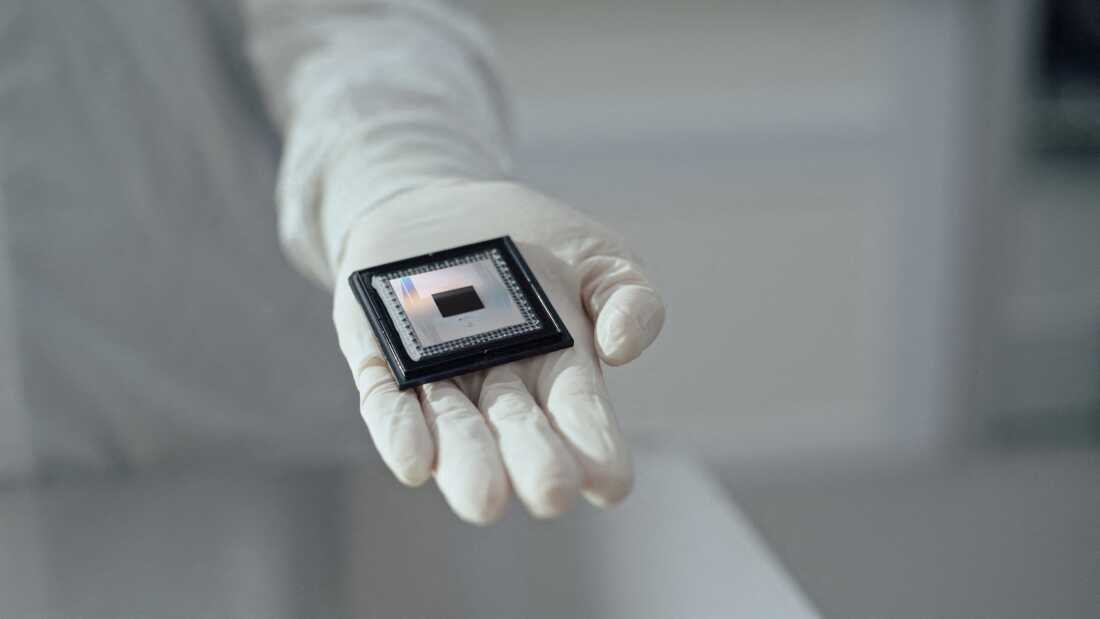

Ironwood is the product of Google’s decade-long, multi-billion-dollar effort to develop TPUs as a viable alternative to NVIDIA’s market-leading AI processors. The proprietary chips are available only to Google’s own engineers or through its cloud service. Google uses them to build and deploy its Gemini AI models. The company has not disclosed which manufacturer produces the Google-designed Ironwood processors.

The launch of Ironwood underscores Google’s commitment to advancing AI computing. With its gains in energy efficiency, memory capacity, and bandwidth, Ironwood is positioned to play a central role in Google’s AI initiatives as a platform for developing and deploying AI applications.